Hierarchical Bias-Driven Stratification for Interpretable Causal Effect Estimation

A tree-based method that stratifies data while optimizing for covariate balance, yielding interpretable effect estimates and abstaining from estimation in regions without overlap.

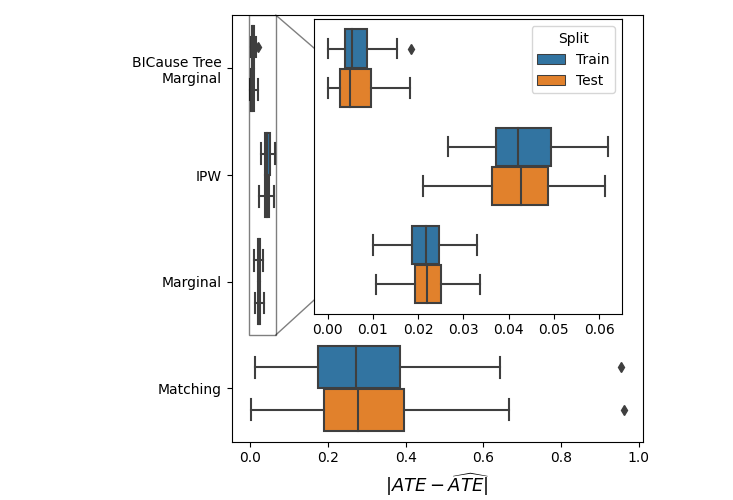

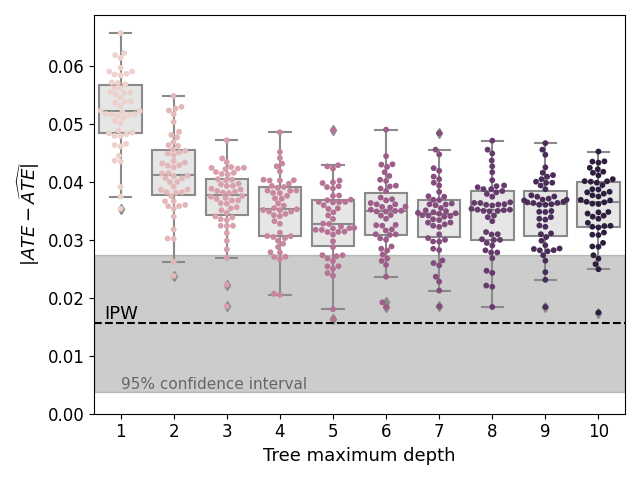

Interpretability and transparency are essential for incorporating causal effect models from observational data into policy decision-making. They can build trust in a model when no ground-truth labels are available to evaluate its accuracy. To date, attempts at transparent causal effect estimation have consisted of applying post hoc explanation methods to black-box models, which are not themselves interpretable. Here, we present BICauseTree: an interpretable balancing method that identifies clusters where natural experiments occur locally. Our approach builds on decision trees with a customized objective function that improves balancing and reduces treatment allocation bias. Consequently, it can also detect subgroups that present positivity violations, exclude them, and provide a covariate-based definition of the target population we can infer from and generalize to. We evaluate the method's performance using synthetic and realistic datasets, explore its bias-interpretability tradeoff, and show that it is comparable with existing approaches.
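The abstract describes the approach only at a high level. Below is a minimal NumPy sketch of balancing-driven stratification with abstention. It rests on assumptions that are not taken from the paper. Each node splits the covariate with the largest absolute standardized mean difference (ASMD) at its median. Splitting stops once every covariate's ASMD falls below a tolerance. Leaves whose treated share lies outside [eps, 1 - eps] are treated as positivity violations and excluded. The function names `asmd`, `grow_strata`, and `stratified_ate` and all default values are hypothetical; this is not the BICauseTree implementation or its objective function.

```python
import numpy as np


def asmd(x, t):
    """Absolute standardized mean difference of covariate x between arms."""
    x1, x0 = x[t == 1], x[t == 0]
    if len(x1) == 0 or len(x0) == 0:
        return 0.0  # imbalance is undefined when one arm is empty
    pooled_sd = np.sqrt((x1.var() + x0.var()) / 2.0)
    if pooled_sd == 0:
        return 0.0
    return float(abs(x1.mean() - x0.mean()) / pooled_sd)


def grow_strata(X, t, idx, depth=0, max_depth=3, min_leaf=20, tol=0.1):
    """Recursively split the most imbalanced covariate; return leaves as index arrays."""
    Xn, tn = X[idx], t[idx]
    scores = [asmd(Xn[:, j], tn) for j in range(X.shape[1])]
    j = int(np.argmax(scores))
    if depth >= max_depth or scores[j] < tol:
        return [idx]  # stop: depth budget exhausted or node already balanced
    # Assumption: a simple median split, NOT the paper's customized objective
    cut = np.median(Xn[:, j])
    go_left = Xn[:, j] <= cut
    left, right = idx[go_left], idx[~go_left]
    if len(left) < min_leaf or len(right) < min_leaf:
        return [idx]  # refuse splits that would create tiny strata
    return (grow_strata(X, t, left, depth + 1, max_depth, min_leaf, tol)
            + grow_strata(X, t, right, depth + 1, max_depth, min_leaf, tol))


def stratified_ate(X, t, y, eps=0.05, **tree_kwargs):
    """Size-weighted difference in means over strata, abstaining on non-overlapping leaves."""
    leaves = grow_strata(X, t, np.arange(len(t)), **tree_kwargs)
    kept, effects, sizes = [], [], []
    for leaf in leaves:
        p = t[leaf].mean()  # share of treated units in this stratum
        if p < eps or p > 1 - eps:
            continue  # abstain: too few treated or control units to compare
        tl, yl = t[leaf], y[leaf]
        effects.append(yl[tl == 1].mean() - yl[tl == 0].mean())
        sizes.append(len(leaf))
        kept.append(leaf)
    if not kept:
        raise ValueError("every stratum violates positivity")
    ate = float(np.average(effects, weights=sizes))
    covered = np.concatenate(kept)  # rows of the population the estimate applies to
    return ate, covered


# Usage on simulated data with a known effect of 2.0
rng = np.random.default_rng(0)
n = 2000
X = rng.uniform(size=(n, 2))
# Units with X[:, 0] < 0.25 are never treated: a deliberate positivity violation
t = np.where(X[:, 0] < 0.25, 0, rng.binomial(1, 0.5, size=n))
y = 2.0 * t + X[:, 0] + rng.normal(0, 0.5, size=n)
ate, covered = stratified_ate(X, t, y)
print(round(ate, 2), len(covered) / n)
```

In this sketch the excluded rows are those the tree could not balance. A covariate-based description of the retained population, as the abstract describes, would come from the split thresholds along each kept leaf's path rather than from row indices.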

Citation

@article{ter2024hierarchical,
  title={Hierarchical Bias-Driven Stratification for Interpretable Causal Effect Estimation},
  author={Ter-Minassian, Lucile and Szlak, Liran and Karavani, Ehud and Holmes, Chris and Shimoni, Yishai},
  journal={arXiv preprint arXiv:2401.17737},
  year={2024}
}